Small Language Models (SLMs) are changing the way artificial intelligence is deployed and consumed. According to Data Conversationalist, SLMs are faster, cheaper, and more efficient than much larger Large Language Models (LLMs). They can run on laptops, phones, a Raspberry Pi, or other edge devices. Because data can be processed locally rather than sent to a remote server, they are well suited to privacy-sensitive industries.

SLMs offer several benefits, including low computational requirements, privacy-preserving local processing, and fast inference. As an article on Medium notes, SLMs suit resource-constrained environments and are easier to run on personal or edge devices.

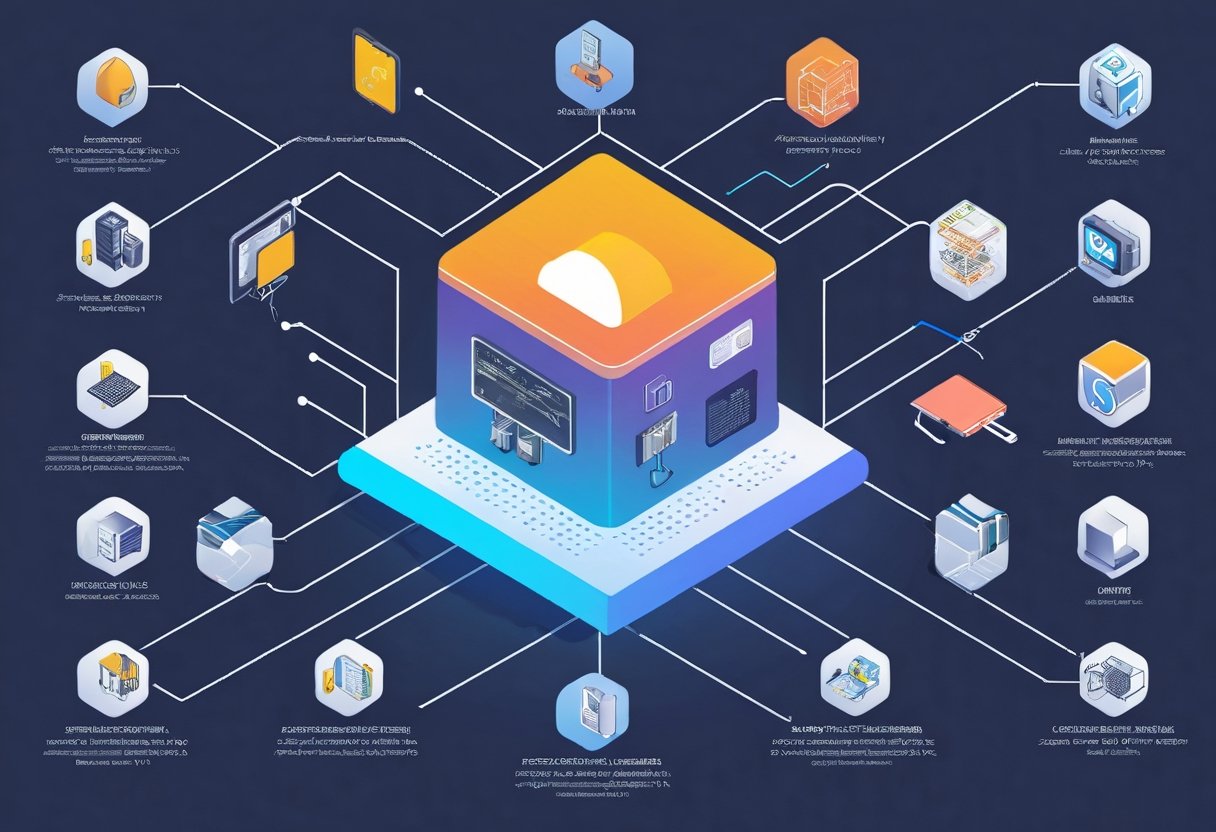

SLMs have many real-world applications, including offline assistants, workflow automation, healthcare, IoT, and customer support. For instance, a LinkedIn post highlights how TinyML (Tiny Machine Learning) brings AI capabilities to highly resource-constrained microcontroller units (MCUs) and embedded devices.

The outlook for SLMs is promising, with the market expected to grow from $9 billion in 2025 to nearly $50 billion by 2030. As an article on ScienceDirect notes, this shift marks a leap forward in innovation and has profound implications for addressing global challenges and advancing the United Nations’ Sustainable Development Goals.
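To put the market forecast in perspective, the growth can be expressed as a compound annual growth rate (CAGR). The short Python sketch below is a back-of-envelope check, not part of the cited forecast. It assumes five compounding years from 2025 to 2030 and treats "nearly $50 billion" as exactly $50 billion. The `cagr` helper is a hypothetical name used only for illustration.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by growth from start_value to end_value over `years`."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    # Solve start * (1 + r) ** years == end for r.
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market-size figures from the text, in billions of USD.
# Assumption: 2025 -> 2030 counts as 5 compounding years.
rate = cagr(9.0, 50.0, 2030 - 2025)
print(f"Implied CAGR: {rate:.1%}")  # prints: Implied CAGR: 40.9%
```

Under these assumptions, the forecast implies the market growing by roughly 41% per year. Because the end value is approximate, the exact figure should be read loosely.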