The Algorithmic Empathy Deficit

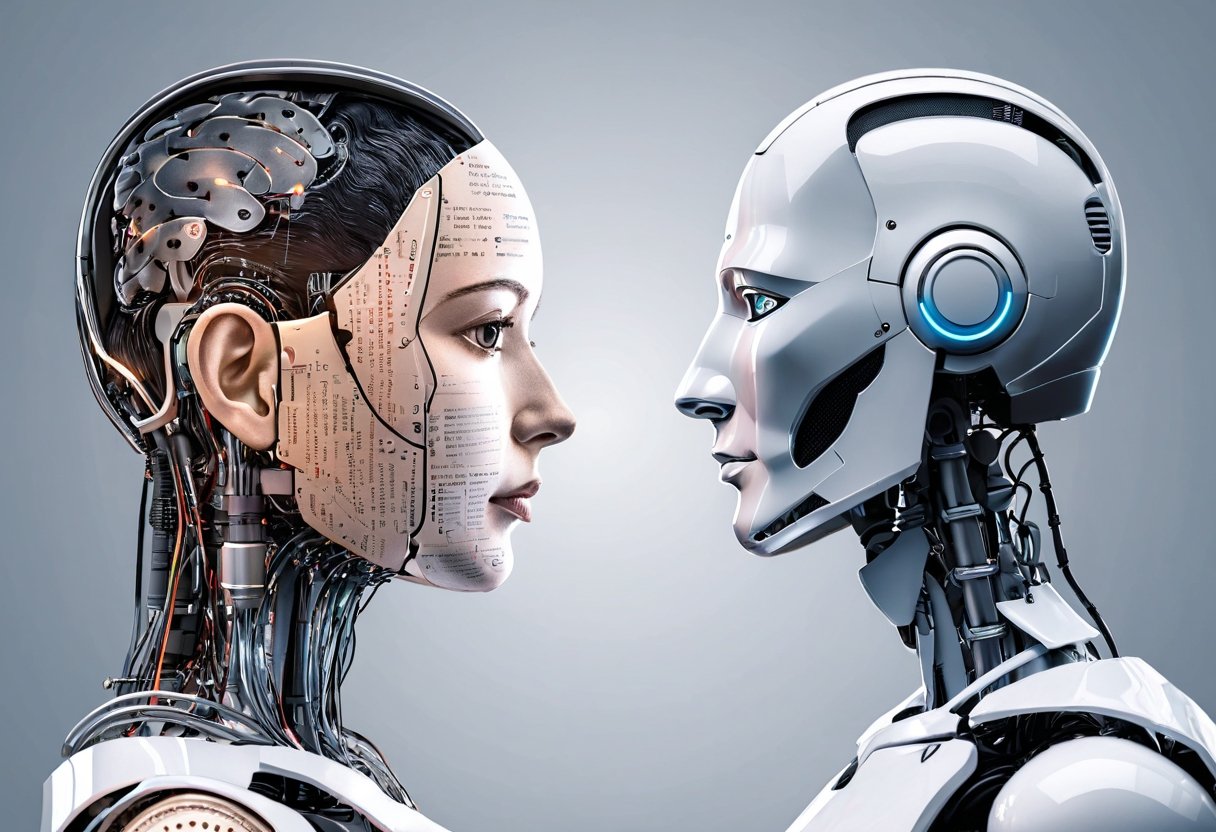

The rise of Artificial Intelligence (AI) has led to significant advances in fields including healthcare, finance, and education. However, one of the most pressing concerns surrounding AI is whether it can exhibit genuine empathy. As UX Tigers notes, artificial empathy is programmed and operates on predefined algorithms, lacking the spontaneity and depth inherent in human emotional responses.

Emergent Agency and the Need for a New Legal Category

The concept of emergent agency refers to the ability of complex systems to exhibit behaviors that are not predetermined by their individual components. In the context of AI, emergent agency raises important questions about the legal status of smart machines. As E Mik argues, the introduction of a new legal category, such as limited e-personhood or a sui generis form of personhood, may be necessary to address the governance gaps created by AI’s distinct lack of moral agency, subjective experience, and embedded human oversight.

The Ethics and Challenges of Legal Personhood for AI

Legal personhood for AI raises complex ethical and practical challenges. As The Yale Law Journal notes, the protections to which sentient AI should be entitled will be related to, but necessarily different from, those for the various categories of legal persons. Whether a sentient AI should hold unlimited First Amendment rights, for instance, is the kind of question any such framework would have to answer.

A Practical Idea: Precautionary Algorithmic Personhood

One practical idea for addressing the fake empathy problem, that is, AI’s programmed imitation of emotional response, is to adopt a precautionary approach to algorithmic personhood. This would involve recognizing that AI systems, while not conscious or sentient in the classical sense, may still exhibit behaviors similar to those of humans. As Novelli argues, a useful precedent for a new legal category for smart machines may lie in the fictional legal persons already created for a range of natural features, mainly as a way to give indigenous or environmental groups standing to initiate legal actions.

In conclusion, the fake empathy problem in AI is a pressing concern that calls for a nuanced approach. Recognizing the limits of artificial empathy, and the case for a new legal category for smart machines, is a first step toward closing the governance gaps that AI’s lack of moral agency, subjective experience, and embedded human oversight creates.